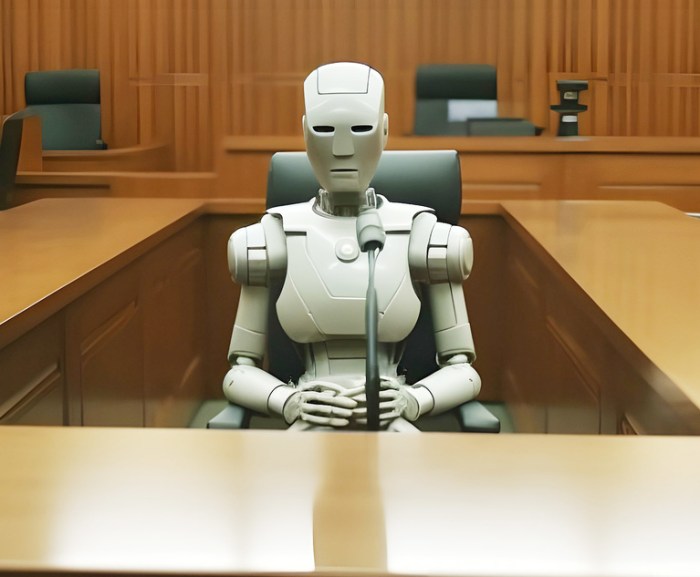

Generative AI in courts, law, and justice: it sounds like a sci-fi movie plot, right? But this technology is already starting to reshape the legal landscape. From speeding up legal research to potentially automating entire processes, generative AI is raising exciting (and slightly terrifying) questions about the future of law. This isn’t just about efficiency; it’s about access to justice, fairness, and the very nature of legal decision-making. We’re diving deep into the implications, exploring the potential benefits and the serious ethical concerns that come with them.

This exploration covers the use of generative AI in legal research, document drafting, judicial decision-making, and the broader ethical and societal implications. We’ll unpack how this technology can boost efficiency, but also highlight the potential pitfalls, like algorithmic bias and the risk of misinterpretations. We’ll also look at the future of the legal profession and how generative AI might transform it – from courtroom proceedings to legal education.

Generative AI in Legal Research

The legal profession, traditionally reliant on meticulous manual research, is undergoing a significant transformation thanks to the advent of generative AI. These powerful tools are poised to revolutionize how lawyers conduct research, analyze case law, and draft legal documents, offering both unprecedented speed and potential for improved accuracy. However, as with any technological advancement, it’s crucial to understand both the benefits and the limitations before fully embracing this new frontier.

Accelerating Legal Research Processes with Generative AI

Generative AI can accelerate legal research by automating several time-consuming tasks. Instead of manually sifting through countless documents and case law, lawyers can use AI to quickly identify relevant precedents, statutes, and regulations. AI algorithms can analyze vast amounts of legal text in seconds, extracting key information and summarizing complex legal arguments, which frees lawyers to focus on higher-level strategic thinking and client interaction. For instance, an AI could quickly identify all cases involving a specific legal principle within a specific jurisdiction, a task that might take a human researcher hours or even days. This speed advantage can translate into increased efficiency and cost savings for law firms.

Benefits and Drawbacks of Generative AI in Legal Document Review

Using generative AI for legal document review presents several compelling advantages. AI can quickly scan and analyze large volumes of documents, flagging potentially relevant information and highlighting inconsistencies or discrepancies. This automated review process can significantly reduce the time and resources required for due diligence, contract review, and discovery. However, drawbacks exist. The accuracy of AI-generated summaries and analyses depends heavily on the quality of the training data. Biases in the data can lead to inaccurate or incomplete results. Furthermore, over-reliance on AI could lead to a decline in critical thinking skills among legal professionals. Human oversight remains crucial to ensure accuracy and contextual understanding. For example, an AI might miss a crucial nuance in a contract clause that a human lawyer would readily identify.

Comparison of Traditional and AI-Powered Legal Research Methods

Traditional legal research methods, primarily manual searches through databases and law libraries, are inherently slow and labor-intensive. They require extensive knowledge of legal terminology and research techniques. AI-powered approaches, on the other hand, use machine learning algorithms to automate many parts of the research process, which shortens the workflow considerably. While traditional methods offer a deep understanding of the context and nuances of the law, AI offers speed and scalability, making it suitable for high-volume tasks. The ideal approach is likely a hybrid model that combines the strengths of both.

Hypothetical Scenario: Generative AI in Case Law Research

Imagine a lawyer researching legal precedent on the use of artificial intelligence in autonomous vehicles. Using a generative AI tool, the lawyer inputs keywords like “autonomous vehicles,” “liability,” and “AI.” The AI then quickly identifies relevant cases from various jurisdictions, summarizing key arguments and rulings. The lawyer can further refine the search by specifying criteria such as the year of the decision or the specific legal issue. This gives the lawyer a comprehensive overview of the relevant case law quickly, saving significant time and effort compared to traditional research methods. The AI might even identify subtle connections between seemingly disparate cases that a human researcher might overlook.
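To make the refine-by-criteria step concrete, here is a minimal Python sketch. It uses plain keyword matching rather than a generative model, and everything in it is an assumption for illustration: the `CaseRecord` fields, the `search_cases` helper, and the sample cases are hypothetical and do not come from any real legal database or tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseRecord:
    title: str          # hypothetical case name, not a real citation
    jurisdiction: str
    year: int
    summary: str


def search_cases(cases, keywords, jurisdiction=None, min_year=None):
    """Return cases whose summary mentions every keyword, narrowed by optional filters."""
    wanted = [k.lower() for k in keywords]
    hits = []
    for case in cases:
        text = case.summary.lower()
        # Naive substring matching: crude, but enough to show the filtering idea.
        if not all(k in text for k in wanted):
            continue
        if jurisdiction is not None and case.jurisdiction != jurisdiction:
            continue
        if min_year is not None and case.year < min_year:
            continue
        hits.append(case)
    # Newest decisions first.
    return sorted(hits, key=lambda c: c.year, reverse=True)


# Made-up sample data for illustration only.
SAMPLE_CASES = [
    CaseRecord("Example v. AutoDrive", "CA", 2021,
               "Liability for an autonomous vehicle collision caused by AI perception failure."),
    CaseRecord("Sample v. RoboCab", "NY", 2018,
               "Autonomous vehicles and manufacturer liability where the AI misread a signal."),
    CaseRecord("Placeholder v. Acme", "CA", 2020,
               "Contract dispute over software licensing."),
]

recent = search_cases(SAMPLE_CASES, ["autonomous vehicle", "liability"], min_year=2020)
for case in recent:
    print(case.year, case.jurisdiction, case.title)
```

A real research tool would rank results by semantic similarity instead of exact substrings, but the narrowing by year or jurisdiction would probably look much like the optional filters here.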

Comparison of Generative AI Tools for Legal Research

| Tool Name | Features | Cost | Limitations |
|---|---|---|---|
| Lex Machina | Predictive analytics, case law analysis | Subscription-based | Limited to specific jurisdictions |
| ROSS Intelligence (ceased operations in 2021) | Natural language processing, legal research | Subscription-based | Requires specific phrasing for optimal results |
| Casetext | AI-powered legal research platform | Subscription-based | Accuracy depends on data quality |
| Kira Systems | Contract review and analysis | Subscription-based | May require human review for complex contracts |

AI-Driven Legal Drafting and Automation

The legal world, often perceived as steeped in tradition, is undergoing a rapid transformation thanks to the advent of generative AI. This technology is no longer a futuristic fantasy; it’s actively reshaping how legal professionals approach drafting, review, and analysis, promising increased efficiency and potentially even greater accuracy. This shift, however, also presents ethical considerations and challenges that need careful navigation.

Generative AI can significantly streamline the process of creating legal documents. Algorithms trained on vast datasets of legal texts can generate initial drafts of contracts, pleadings, and other documents, reducing the time lawyers spend on rote tasks. This allows them to focus on higher-level strategic thinking and client interaction. The AI acts as a powerful assistant that accelerates drafting, while the lawyer retains oversight of the result.

Examples of Automated Legal Tasks

AI’s capabilities in legal automation extend beyond simple document generation. Several specific tasks can be effectively handled by generative AI systems. For instance, contract review, identifying clauses that need attention or potential conflicts, is a time-consuming process that AI can expedite. Similarly, the creation of standardized legal forms, such as NDAs or simple employment agreements, can be automated, freeing up lawyers for more complex cases. Furthermore, AI can assist in summarizing lengthy legal documents, quickly providing key information for efficient decision-making. Finally, the generation of initial pleadings based on provided facts is another area where AI shows significant potential.
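As a rough illustration of what flagging clauses that need attention could look like, the sketch below scans a contract for a small watch-list of phrases. It is a deliberately simple rule-based stand-in, not a generative AI system; the `RISK_PATTERNS` watch-list, the `flag_clauses` helper, and the sample contract are all hypothetical.

```python
import re

# Hypothetical watch-list: label -> pattern. A real review would use a firm's own checklist.
RISK_PATTERNS = {
    "unlimited liability": re.compile(r"\bunlimited liability\b", re.IGNORECASE),
    "automatic renewal": re.compile(r"\bautomatic(?:ally)? renew(?:al|s|ed)?\b", re.IGNORECASE),
    "unilateral termination": re.compile(r"\bterminate\b.*\bat any time\b", re.IGNORECASE),
}


def flag_clauses(contract_text):
    """Split on blank lines and return (clause_number, label, clause_text) for each match."""
    clauses = [c.strip() for c in re.split(r"\n\s*\n", contract_text) if c.strip()]
    flags = []
    for number, clause in enumerate(clauses, start=1):
        for label, pattern in RISK_PATTERNS.items():
            if pattern.search(clause):
                flags.append((number, label, clause))
    return flags


# Made-up contract text for illustration only.
SAMPLE_CONTRACT = """1. The Supplier accepts unlimited liability for data loss.

2. Fees are payable within 30 days of invoice.

3. This Agreement will automatically renew for successive one-year terms.
"""

for number, label, clause in flag_clauses(SAMPLE_CONTRACT):
    print(f"Clause {number}: {label}")
```

Pattern matching like this only catches wording it already knows about, which is one reason the flagged list is a starting point for a lawyer's review and not a substitute for it.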

Ethical Considerations in AI-Driven Legal Drafting

The use of AI in legal drafting raises several ethical concerns. One key issue is ensuring the accuracy and reliability of AI-generated documents. Bias in the training data could lead to biased outputs, potentially resulting in unfair or discriminatory outcomes. Furthermore, maintaining attorney oversight and responsibility is crucial. While AI can assist, the final responsibility for the accuracy and ethical implications of a legal document always rests with the lawyer. Transparency about the use of AI in the drafting process is also essential, both to clients and to the courts. Questions of data privacy and security related to the input and output of the AI systems also need careful consideration.

Impact on Legal Profession Efficiency

The integration of generative AI is poised to significantly enhance the efficiency of the legal profession. By automating routine tasks, lawyers can dedicate more time to complex legal analysis, strategic planning, and client communication. This translates to faster turnaround times, reduced costs for clients, and an improved overall client experience. Law firms can handle a larger volume of cases with the same or fewer staff, potentially leading to increased profitability and a more sustainable business model. The potential for increased efficiency is undeniable, but the full extent of its impact remains to be seen as the technology matures and becomes more widely adopted.

Potential Errors and Mitigation Strategies

While AI offers significant advantages, the potential for errors in AI-generated legal documents remains a concern. These errors can stem from biases in the training data, limitations in the AI’s understanding of nuanced legal concepts, or simply unexpected inputs. Mitigation strategies involve rigorous human review and validation of all AI-generated content. Implementing robust quality control checks and using multiple AI systems to compare outputs can help identify and correct potential errors. Continuous monitoring and retraining of the AI models based on feedback and identified errors are also vital to improving accuracy and reliability over time. Furthermore, clear guidelines and protocols for the use of AI in legal drafting should be established within law firms to minimize the risk of errors and ensure ethical compliance.
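One way to picture the compare-multiple-systems idea is a simple agreement check: if two systems produce very different drafts from the same instructions, that pair goes to a human reviewer first. The sketch below uses Python's standard `difflib` for word-level similarity. The `agreement_report` helper, the 0.8 threshold, and the system names are assumptions chosen for illustration, not an established standard.

```python
from difflib import SequenceMatcher
from itertools import combinations


def agreement_report(drafts, threshold=0.8):
    """Compare every pair of drafts; return pairs whose word-level similarity is below threshold."""
    disagreements = []
    for (name_a, text_a), (name_b, text_b) in combinations(drafts.items(), 2):
        # ratio() is 1.0 for identical word sequences and approaches 0.0 as they diverge.
        score = SequenceMatcher(None, text_a.split(), text_b.split()).ratio()
        if score < threshold:
            disagreements.append((name_a, name_b, round(score, 2)))
    return disagreements


# Made-up outputs from three hypothetical drafting systems.
DRAFTS = {
    "system_a": "The tenant shall pay rent on the first day of each month.",
    "system_b": "The tenant shall pay rent on the first day of each month.",
    "system_c": "The landlord may increase rent without notice at any time.",
}

for name_a, name_b, score in agreement_report(DRAFTS):
    print(f"Review needed: {name_a} vs {name_b} (similarity {score})")
```

Textual similarity says nothing about which draft is legally correct, so a check like this can only prioritize human review. Two systems can also agree and both be wrong.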

Generative AI and Judicial Decision-Making

The integration of generative AI into the judicial system is a rapidly evolving field, promising to revolutionize how judges approach decision-making, while simultaneously raising significant ethical and practical concerns. This technology offers potential benefits in terms of efficiency and consistency, but its deployment requires careful consideration of its limitations and potential biases.

Generative AI’s capacity to process vast amounts of legal data opens exciting possibilities for judicial assistance. Its applications extend beyond simple searches; it can identify patterns in case law, predict outcomes based on similar precedents, and even assist in drafting judicial opinions. However, the reliance on AI for such crucial tasks necessitates a deep understanding of its capabilities and limitations to ensure fairness and transparency.

Potential Applications of Generative AI in Assisting Judges with Decision-Making

Generative AI can offer judges several valuable tools. It can efficiently sift through mountains of case law, identifying relevant precedents and summarizing key arguments far quicker than a human. This enhanced efficiency could significantly reduce the time spent on research, allowing judges to focus on the nuanced aspects of each case. Furthermore, AI can highlight potential biases or inconsistencies in existing legal interpretations, prompting judges to consider alternative perspectives. For example, an AI system could analyze sentencing data to reveal disparities based on factors like race or socioeconomic status, prompting a more equitable approach. Finally, generative AI can assist in drafting judicial opinions, providing a framework for clear and concise articulation of legal reasoning.

Implications of Using AI to Predict Judicial Outcomes

Predictive policing models already exist, and the same technology can be applied to predict judicial outcomes. These models analyze vast datasets of case details, including charges, evidence, and prior rulings, to estimate the likelihood of a specific verdict or sentence. While this can offer valuable insights into potential trends and help anticipate caseload demands, the implications are complex. Over-reliance on these predictions could lead to biases, potentially reinforcing existing inequalities. For instance, a model trained on data reflecting historical biases might inadvertently perpetuate those biases in future predictions. Consider a scenario where an AI predicts a higher likelihood of conviction for individuals from a specific demographic, even if the evidence against them is weak. This would raise serious concerns about fairness and due process.

Strengths and Weaknesses of AI-Based Prediction Models in the Context of Judicial Impartiality

AI-based prediction models boast the strength of processing massive datasets, identifying patterns and correlations that might escape human observation. They can provide a statistically-informed perspective on potential outcomes, assisting judges in making informed decisions. However, their weaknesses are equally significant. The accuracy of these models is entirely dependent on the quality and representativeness of the data used to train them. Biased data will inevitably lead to biased predictions, undermining judicial impartiality. Furthermore, the “black box” nature of some AI algorithms makes it difficult to understand the reasoning behind their predictions, hindering transparency and accountability. A judge relying on an opaque AI prediction without understanding its basis could inadvertently introduce bias into their judgment.

Arguments For and Against the Use of Generative AI in Sentencing Guidelines

Arguments for using generative AI in sentencing guidelines center on the potential for increased consistency and fairness. An AI system could analyze vast amounts of sentencing data to identify patterns and disparities, leading to more equitable outcomes. It could also help to standardize sentencing practices across different jurisdictions, reducing inconsistencies. However, the arguments against are equally compelling. Concerns about bias in the training data remain paramount. Furthermore, the use of AI in sentencing could remove the human element of judgment and empathy, leading to overly rigid and inflexible sentencing decisions. Over-reliance on an algorithm could lead to overlooking crucial mitigating circumstances unique to individual cases, ultimately undermining justice. For example, an AI might recommend a harsher sentence for a defendant based on statistical probabilities without considering the defendant’s remorse or rehabilitative potential.

Hypothetical Scenario Exploring the Use of Generative AI to Analyze Case Precedents and Identify Relevant Legal Principles

Imagine a judge presiding over a complex contract dispute involving novel legal issues. Using a generative AI system, the judge could input the relevant facts and legal arguments. The AI could then analyze thousands of past contract cases, identifying similar precedents, summarizing key arguments, and highlighting relevant legal principles. The AI might even identify potential legal loopholes or counterarguments not initially considered by either party. This process would significantly accelerate legal research, enabling the judge to make a more informed and efficient decision. However, it’s crucial to remember that the AI is a tool, not a replacement for human judgment. The judge must critically evaluate the AI’s findings, ensuring they align with the specific facts and context of the current case, and ultimately make the final determination.

Ethical and Societal Implications of Generative AI in Law

The integration of generative AI into the legal field promises efficiency and innovation, but it also raises significant ethical and societal concerns. These concerns aren’t just theoretical; they represent real-world challenges that need careful consideration before widespread adoption. Failure to address these issues could undermine the very principles of justice and fairness that the legal system is designed to uphold.

Bias in AI Algorithms

AI algorithms are trained on data, and if that data reflects existing societal biases (racial, gender, socioeconomic, etc.), the AI can perpetuate and even amplify those biases. In legal contexts, this could lead to unfair or discriminatory outcomes. For instance, an AI-powered tool used for sentencing might recommend harsher penalties for defendants from certain demographic groups if the training data over-represents those groups in crime statistics, regardless of actual guilt or mitigating circumstances. This isn’t a hypothetical problem; studies have already shown biases in various AI systems used in other sectors, highlighting the urgent need for mitigation strategies in the legal field. Addressing this requires careful curation of training datasets, rigorous testing for bias, and mechanisms to identify and correct biased outputs.
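A very basic form of testing for bias is to compare how often a tool produces an adverse recommendation for each group. The sketch below does that on made-up records. The group labels, the `rate_by_group` and `disparity_ratio` helpers, and the data are hypothetical, and a large gap is a signal to investigate, not proof of discrimination on its own.

```python
from collections import defaultdict


def rate_by_group(records):
    """records: iterable of (group, flagged) pairs; returns {group: share of records flagged}."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, is_flagged in records:
        totals[group] += 1
        flagged[group] += int(is_flagged)
    return {group: flagged[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means every group is flagged equally often."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Made-up tool outputs: (demographic group, whether a harsher sentence was recommended).
SAMPLE_OUTPUTS = [
    ("A", True), ("A", False), ("A", False), ("A", False),
    ("B", True), ("B", True), ("B", False), ("B", False),
]

rates = rate_by_group(SAMPLE_OUTPUTS)
print(rates)
print(disparity_ratio(rates))
```

In this toy data, group B receives the harsher recommendation twice as often as group A. A real audit would also need to control for legally relevant differences between cases before drawing conclusions.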

Potential for Misuse of Generative AI in the Legal System

The power of generative AI to create convincing but false legal documents presents a significant risk. Malicious actors could use AI to generate fraudulent contracts, forge evidence, or create deepfakes to manipulate legal proceedings. Imagine a scenario where a sophisticated AI generates a seemingly authentic will, leaving a substantial inheritance to a fabricated beneficiary. Detecting such forgeries could prove extremely difficult, requiring advanced forensic analysis and potentially leading to protracted and costly legal battles. Furthermore, the ease with which AI can produce vast quantities of seemingly credible information could overwhelm the system, making it harder to distinguish truth from falsehood.

Transparency and Accountability in AI Development and Deployment

Transparency is paramount. We need to know how AI systems used in legal contexts make their decisions. “Black box” AI, where the decision-making process is opaque, is unacceptable in a system that demands fairness and accountability. Furthermore, clear lines of responsibility must be established. Who is accountable when an AI system makes a mistake that leads to a wrongful conviction or an unjust ruling? Is it the developers, the users, or the institutions that deploy the technology? Developing robust auditing mechanisms and establishing clear liability frameworks are crucial steps towards building trust and ensuring accountability.

Impact of Generative AI on Access to Justice

While AI has the potential to increase access to justice by making legal services more affordable and accessible, it also risks exacerbating existing inequalities. If AI-powered legal tools are only available to those who can afford them, it could create a two-tiered system where the wealthy have access to sophisticated AI assistance while the poor are left behind. This digital divide could further marginalize vulnerable populations and deepen existing disparities in the legal system. Strategies to ensure equitable access, such as providing public access to AI-powered legal tools and investing in digital literacy programs, are crucial to mitigating this risk.

Framework for Ethical Guidelines Governing the Use of Generative AI in the Legal Profession

Establishing a robust ethical framework is critical. This framework should guide the development, deployment, and use of generative AI in the legal profession. Here are some key guidelines:

“AI systems used in legal contexts must be designed and trained to minimize bias and ensure fairness.”

“Transparency and explainability are essential. The decision-making processes of AI systems must be understandable and auditable.”

“Robust mechanisms for accountability must be established to address errors and misuse of AI systems.”

“Efforts must be made to ensure equitable access to AI-powered legal tools, preventing the exacerbation of existing inequalities.”

“Continuous monitoring and evaluation of AI systems are necessary to identify and address emerging ethical challenges.”

The Future of Generative AI in Courts and Justice Systems

Generative AI is poised to revolutionize the legal landscape, impacting everything from legal research to courtroom proceedings. Its potential to automate tasks, analyze vast datasets, and enhance decision-making is undeniable, but its integration also presents significant challenges. The coming years will see a dramatic shift in how legal professionals work and how justice is administered.

Generative AI’s Predicted Role in the Legal System

Generative AI will likely play a multifaceted role in the future legal system. Imagine AI assistants helping lawyers draft briefs and contracts with unparalleled speed and accuracy, identifying relevant case law with precision, and even predicting the likelihood of success in various legal strategies. In the courtroom, AI could analyze witness testimonies for inconsistencies, summarize complex legal arguments, and even assist judges in making informed decisions by providing objective analyses of evidence. This shift will demand a new understanding of the relationship between technology and legal practice, with a focus on responsible AI implementation and ethical considerations. The use of AI for predictive policing, already seen in some jurisdictions, is likely to become more sophisticated and widespread, potentially leading to both improved efficiency and increased concerns about bias and fairness.

Transformation of Legal Education and Training

The integration of generative AI will necessitate a significant overhaul of legal education and training. Law schools will need to adapt their curricula to incorporate AI literacy, teaching students how to effectively use and critically evaluate AI tools in their legal work. This will include training on ethical considerations, data privacy, and the potential biases embedded within AI algorithms. Practical skills in prompt engineering, data analysis, and AI-assisted legal research will become essential components of a legal professional’s skillset, alongside traditional legal training. Furthermore, continuous professional development will be crucial, ensuring lawyers can keep pace with the rapidly evolving capabilities of generative AI. For example, programs focusing on AI-driven contract analysis and negotiation could become commonplace.

Challenges in Integrating Generative AI into Legal Infrastructure

Integrating generative AI into the existing legal infrastructure presents several formidable challenges. Data privacy and security are paramount concerns. The use of AI in legal contexts necessitates the handling of sensitive personal information, requiring robust security measures to prevent breaches and misuse. Ensuring the accuracy and reliability of AI-generated legal advice is another critical challenge. AI models are only as good as the data they are trained on, and biases in the data can lead to inaccurate or unfair outcomes. Addressing these biases and ensuring algorithmic transparency will be crucial for maintaining public trust and confidence in the legal system. Furthermore, the legal and ethical frameworks governing the use of AI in legal practice are still evolving, creating uncertainty and the need for careful regulation. The potential for job displacement also necessitates thoughtful consideration and strategies for workforce adaptation.

Innovative Applications in Law Enforcement and Criminal Justice

Generative AI offers innovative applications within law enforcement and criminal justice. AI-powered tools can analyze vast amounts of crime data to identify patterns and predict potential crime hotspots, assisting in resource allocation and proactive policing. In investigations, AI can analyze evidence, such as audio and video recordings, to identify inconsistencies or potential leads. Furthermore, AI can assist in identifying and mitigating biases in policing and sentencing, promoting fairer outcomes. However, the use of AI in these contexts must be carefully monitored to prevent misuse and ensure accountability. The potential for algorithmic bias needs careful consideration, and safeguards must be put in place to prevent discriminatory practices. For example, an AI system analyzing crime data might inadvertently reinforce existing biases if the training data reflects historical inequities in policing.

Generative AI’s Impact on the Legal Job Market and Required Skills

The impact of generative AI on the legal job market will be significant, but not necessarily catastrophic. While some routine tasks may be automated, the demand for legal professionals with advanced skills in AI and data analysis will increase. Future legal professionals will need to be proficient in using AI tools to enhance their efficiency and effectiveness, but also possess critical thinking skills to interpret and evaluate the output of these tools. The ability to understand the limitations of AI and to identify potential biases will be crucial. The legal profession will evolve towards a greater emphasis on strategic thinking, client communication, and complex problem-solving, areas where human expertise remains irreplaceable. The legal job market will likely see a shift in demand, with a need for professionals who can effectively bridge the gap between human judgment and AI capabilities.

A Future Courtroom Scene

Imagine a courtroom of the future. The judge sits before a large holographic display, showcasing key evidence analyzed by AI. Lawyers use AI-powered tools to cross-examine witnesses, highlighting inconsistencies and presenting compelling arguments supported by AI-driven legal research. The AI system summarizes complex legal arguments in real-time, ensuring everyone understands the core issues. The judge receives AI-generated reports on the credibility of witnesses and the strength of the evidence presented, aiding in impartial decision-making. While human judgment remains central, AI assists in streamlining the process, ensuring fairness, and accelerating the delivery of justice.

Conclusion

The integration of generative AI into the legal system is a double-edged sword. While offering incredible potential for efficiency and improved access to justice, it also presents significant ethical challenges that demand careful consideration. From mitigating bias in algorithms to ensuring transparency and accountability, the responsible development and deployment of this technology are paramount. The future of law hinges on our ability to harness the power of generative AI while guarding against its inherent risks. The journey ahead requires thoughtful dialogue, robust regulations, and a commitment to ethical practices to ensure a just and equitable legal system for all.