Elon Musk x Checkmarks: Terrorist Groups? The question hangs heavy in the air. Since Musk’s takeover of X (formerly Twitter), content moderation has become a chaotic free-for-all, raising serious concerns about the platform’s role in the spread of extremist ideologies. The verification system, once a symbol of authenticity, now seems entangled in a web of questionable accounts, potentially aiding terrorist groups in their recruitment and propaganda efforts. This isn’t just a tech story; it’s a battleground for free speech, safety, and the very future of online discourse.

The shift in X’s content moderation policies has been dramatic. The loosening of restrictions, coupled with the often unpredictable application of the verification system, has created an environment ripe for the spread of misinformation and harmful content. This contrasts sharply with the more stringent approaches of other major social media platforms, highlighting the unique challenges X faces in balancing free speech with the need to protect its users.

Elon Musk’s Acquisition of X (formerly Twitter) and its Impact on Content Moderation

Elon Musk’s October 2022 acquisition of Twitter, now rebranded as X, marked a seismic shift in the platform’s approach to content moderation. His stated commitment to “free speech absolutism” immediately raised concerns about the potential for increased misinformation, hate speech, and extremist content to proliferate. The subsequent changes implemented have been dramatic, sparking both fervent support and widespread criticism.

The changes in content moderation policies since Musk’s takeover have been significant and, arguably, inconsistent. Initially, a large portion of the content moderation team was laid off, resulting in a perceived weakening of enforcement mechanisms. Simultaneously, Musk implemented changes to the platform’s algorithms and policies, seemingly prioritizing reach and engagement over safety. This has led to a noticeable increase in the visibility of previously suppressed content, including controversial opinions and previously banned accounts. While some argue this fosters open dialogue, others fear it undermines the platform’s responsibility to protect users from harmful content.

Changes in Content Moderation Policies

The most significant change has been the reduction in the enforcement of existing rules against hate speech, misinformation, and harassment. Previously, Twitter had a relatively robust system for flagging and removing such content, often employing a combination of automated tools and human review. Under Musk’s leadership, the emphasis on human moderation has drastically diminished, leaving automated systems, often prone to errors, to bear the brunt of content control. This has led to inconsistencies in enforcement and a perception that certain types of content are being prioritized over others. For example, while some previously banned accounts have been reinstated, others remain suspended, seemingly without clear justification.

Impact on the Spread of Misinformation and Extremist Content

The relaxed content moderation policies have arguably contributed to an increase in the spread of misinformation and extremist content on X. Studies by independent researchers have shown a correlation between the changes and a rise in the visibility of false narratives, conspiracy theories, and hate speech. The absence of robust fact-checking mechanisms and the reinstatement of previously banned accounts associated with extremist groups have exacerbated this issue. The lack of consistent enforcement creates a breeding ground for harmful content, making it more difficult for users to discern credible information from disinformation. The algorithmic changes, which prioritize engagement, have inadvertently amplified the reach of such content, further contributing to its spread.

Comparison to Other Major Social Media Platforms

Compared to other major social media platforms like Facebook, Instagram, and YouTube, X’s current approach to content moderation stands out as comparatively hands-off. These platforms, while facing their own criticisms regarding content moderation, generally maintain more active and comprehensive systems for identifying and removing harmful content, often employing large teams of human moderators alongside sophisticated AI-powered tools. While the specifics vary, they generally place greater emphasis than X currently does on proactively identifying and addressing potentially harmful content. The gap is significant, suggesting that X prioritizes free speech over safety to a greater degree than its competitors.

A Hypothetical Content Moderation Strategy for X

A balanced content moderation strategy for X would require a multi-pronged approach. It would need to incorporate a robust system of automated content detection alongside a smaller but highly trained team of human moderators specializing in identifying nuanced forms of hate speech and misinformation. This team could focus on reviewing flagged content and addressing appeals. Transparency is crucial; the platform should clearly define its content moderation policies and provide mechanisms for users to report violations. Furthermore, the algorithm should be adjusted to prioritize accuracy and safety over pure engagement, minimizing the amplification of harmful content. Independent fact-checking organizations could also play a role in verifying information and providing context. This approach seeks to balance the principles of free speech with the responsibility to protect users from harmful content.
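To make that division of labor more concrete, here is a minimal Python sketch of how such a pipeline might route content. It assumes a hypothetical automated classifier that outputs a harm score between 0 and 1; the thresholds, the report count, and all names (`Post`, `triage`, `handle_appeal`) are illustrative assumptions, not X’s actual system.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    AUTO_REMOVE = "auto_remove"
    HUMAN_REVIEW = "human_review"
    ALLOW = "allow"


@dataclass
class Post:
    post_id: str
    harm_score: float      # hypothetical classifier output in [0, 1]
    user_reports: int = 0  # number of user reports received


# Hypothetical thresholds; a real platform would tune these on labeled data.
REMOVE_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.60
REPORTS_FOR_REVIEW = 3


def triage(post: Post) -> Action:
    """Remove only near-certain violations automatically; send uncertain
    or user-reported posts to the human moderation team."""
    if post.harm_score >= REMOVE_THRESHOLD:
        return Action.AUTO_REMOVE
    if post.harm_score >= REVIEW_THRESHOLD or post.user_reports >= REPORTS_FOR_REVIEW:
        return Action.HUMAN_REVIEW
    return Action.ALLOW


def handle_appeal(decision: Action) -> Action:
    """An appealed automated removal is always re-decided by a human."""
    return Action.HUMAN_REVIEW if decision is Action.AUTO_REMOVE else decision


for p in [Post("a", 0.98), Post("b", 0.70), Post("c", 0.10, user_reports=5), Post("d", 0.05)]:
    print(p.post_id, triage(p).value)
# a auto_remove
# b human_review
# c human_review
# d allow
```

Only near-certain cases are removed without a human, which keeps the small review team focused on ambiguous posts, user reports, and appeals. Where the thresholds sit is exactly the free-speech-versus-safety trade-off: lowering them removes more harmful content, but also more legitimate speech.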

The Role of Verification (Checkmarks) on X and its Association with Terrorist Groups



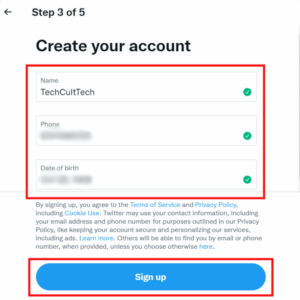

The blue checkmark on X, formerly Twitter, once signified verified accounts belonging to notable individuals and organizations. Its purpose was to authenticate identity and combat impersonation, thereby enhancing trust and reducing the spread of misinformation. However, Elon Musk’s changes to the verification system, including the paid verification program, have significantly altered its function and introduced new vulnerabilities, particularly regarding the proliferation of extremist content.

The verification system’s intended purpose was straightforward: to distinguish genuine accounts from fakes. This was crucial for preventing the spread of false information and protecting users from scams and malicious actors. The shift to a paid verification model, however, has arguably diluted this original purpose: a checkmark no longer guarantees authenticity or trustworthiness. Because a checkmark can now be obtained largely by paying for a subscription, malicious actors, including those affiliated with terrorist groups, can more easily gain a veneer of legitimacy.

Verified Accounts Used to Promote or Support Terrorist Groups

Several instances have emerged where verified accounts, both before and after the changes to X’s verification system, have been used to promote or support terrorist groups. While specific examples are often swiftly removed by platform moderators, the very existence of such accounts highlights a critical flaw in the system. The ease of obtaining verification, coupled with the platform’s struggle to effectively moderate content at scale, creates a fertile ground for extremist groups to operate. The speed at which such accounts are created and the relative ease of circumventing detection present significant challenges. For example, accounts may use subtle messaging, coded language, or seemingly innocuous content to evade detection, while simultaneously connecting with potential recruits and spreading propaganda.

Terrorist Group Use of Social Media for Recruitment and Propaganda

Terrorist organizations actively utilize social media platforms, including X, for recruitment and propaganda dissemination. They leverage the platforms’ reach and accessibility to broadcast their messages to a wide audience, bypassing traditional media gatekeepers. This includes the use of videos, images, and text-based posts designed to attract new members, justify their actions, and spread fear and intimidation. Recruitment strategies often target vulnerable individuals, exploiting grievances and offering a sense of belonging and purpose. Propaganda efforts aim to legitimize their cause, demonize opponents, and inspire violence. The decentralized nature of social media makes it difficult to monitor and control the spread of such content.

Challenges in Identifying and Addressing Terrorist Content

Social media companies face numerous challenges in identifying and addressing terrorist content. The sheer volume of content uploaded daily makes manual moderation impractical. Moreover, terrorist groups constantly adapt their tactics, employing sophisticated methods to evade detection, such as using coded language, employing bots to amplify their messages, and utilizing multiple accounts to avoid bans. The international nature of these groups also complicates efforts, as different countries have varying laws and regulations regarding terrorist content. The difficulty in accurately identifying and flagging such content, combined with the constant evolution of methods used by terrorist groups, presents an ongoing challenge that requires significant resources and technological innovation to address.

The Spread of Extremist Ideologies and Propaganda on X

The acquisition of X (formerly Twitter) by Elon Musk sparked considerable debate regarding content moderation and the platform’s role in the dissemination of extremist ideologies. While X has taken steps to address the issue, the sheer scale and sophistication of terrorist propaganda campaigns present a significant ongoing challenge. Understanding the methods employed by these groups and the effectiveness of their strategies is crucial to mitigating the threat.

The effectiveness of extremist propaganda on X hinges on its ability to circumvent content moderation efforts while simultaneously reaching a wide and receptive audience. This requires a multi-pronged approach, combining strategic use of hashtags, sophisticated networking, and the exploitation of platform features.

Terrorist Group Strategies for Disseminating Messages on X

Terrorist groups utilize various tactics to spread their messages on X, often adapting to the platform’s evolving policies and algorithms. These strategies include the creation of seemingly benign accounts that gradually introduce extremist viewpoints, the use of coded language and imagery to evade detection, and the strategic deployment of propaganda within seemingly innocuous conversations. They leverage trending hashtags and news events to increase visibility, and utilize bot networks to amplify their reach and create an illusion of widespread support. Furthermore, the partial anonymity the platform offers can be exploited to shield identities and avoid accountability.

Effectiveness of Strategies in Recruitment and Ideological Spread

These strategies can be highly effective. The ability to target specific demographics through tailored messaging, coupled with X’s immediate feedback and engagement mechanisms, creates a powerful echo chamber. The constant reinforcement of extremist narratives, combined with the social validation provided by online communities, can radicalize individuals and draw in new members. The ease of finding like-minded people online significantly lowers the barrier to entry into extremist groups. Studies have shown a correlation between increased exposure to online extremist content and radicalization, particularly among vulnerable individuals.

Prevalence of Extremist Content on X Compared to Other Platforms

While direct comparisons across platforms are difficult due to varying methodologies and reporting standards, X has historically been identified as a significant platform for the spread of extremist content. Its large user base and global reach make it an attractive target for terrorist organizations seeking to disseminate their propaganda. Other platforms like Facebook and YouTube have also struggled with extremist content, but X’s open, real-time nature, combined with its less stringent content moderation policies at certain points in time, may have made such content more prevalent there. The shift in moderation policies under Musk’s ownership has likely changed the prevalence of extremist content on X, although the exact impact is still being studied and debated by researchers.

Methods of Spreading Propaganda Online

| Method | Description | Effectiveness | Example |
|---|---|---|---|
| Coded Language & Imagery | Using subtle symbols and metaphors to avoid detection by algorithms. | High, particularly in the early stages of engagement. | Using seemingly innocuous images to represent violent acts or extremist ideologies. |
| Hashtag Manipulation | Leveraging trending hashtags to increase visibility and reach a wider audience. | Moderate to High, depending on the trend’s relevance and reach. | Using a popular political hashtag to subtly insert extremist views. |
| Bot Networks | Utilizing automated accounts to amplify messages and create an illusion of widespread support. | High, especially for creating artificial trends and influencing online narratives. | Creating numerous fake accounts to retweet and like extremist content. |
| Targeted Advertising | Using paid advertising to reach specific demographics with tailored messages. | High, allowing precise targeting of vulnerable individuals. | Paying for ads promoting extremist ideologies to specific age groups or locations. |

Counter-Terrorism Strategies and Responses on X

The acquisition of X (formerly Twitter) by Elon Musk ignited a fierce debate about content moderation and its role in combating terrorism. While the platform’s approach has been criticized for its perceived laxity, X, like other social media giants, employs various strategies to identify and remove terrorist content, balancing free speech with the urgent need to prevent the spread of extremism. These strategies are constantly evolving in response to the ever-changing tactics of terrorist organizations.

The effectiveness of these strategies is a complex issue, influenced by technological advancements, legal frameworks, and the sheer volume of content generated daily. There’s no single silver bullet; instead, a multi-pronged approach is necessary.

Examples of Counter-Terrorism Strategies Implemented by X and Other Social Media Platforms

X, along with platforms like Facebook and YouTube, utilizes a combination of automated tools and human review to combat terrorist content. Automated systems scan for keywords, hashtags, and images associated with terrorist groups. Human moderators then review flagged content to determine whether it violates platform policies. Furthermore, these platforms often work with law enforcement agencies and counter-terrorism experts to identify and address emerging threats. For instance, they might collaborate on identifying and taking down accounts linked to specific terrorist organizations or campaigns. This collaborative approach is crucial for effective counter-terrorism efforts online.
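The automated first pass described here can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the watchlists and the hash database are hypothetical placeholders, and exact SHA-256 matching stands in for the perceptual hashing that industry hash-sharing systems typically use so that re-encoded or cropped copies still match. A match only sends the post to human review; it removes nothing by itself.

```python
import hashlib
import re
from typing import List, Optional

# Hypothetical database of hashes of media already confirmed as terrorist
# content; the single entry here is computed from placeholder bytes.
KNOWN_MEDIA_HASHES = {hashlib.sha256(b"known-propaganda-video-bytes").hexdigest()}

# Hypothetical watchlists; real ones are curated by experts and change often.
WATCHED_KEYWORDS = {"example_banned_phrase"}
WATCHED_HASHTAGS = {"#example_banned_tag"}


def scan_post(text: str, media: Optional[bytes] = None) -> List[str]:
    """Return the reasons (if any) why a post should go to human review."""
    reasons = []
    lowered = text.lower()
    # Extract hashtags such as "#tag_name" and compare against the watchlist.
    if set(re.findall(r"#\w+", lowered)) & WATCHED_HASHTAGS:
        reasons.append("hashtag")
    # Plain substring match: cheap, but blind to coded language.
    if any(keyword in lowered for keyword in WATCHED_KEYWORDS):
        reasons.append("keyword")
    # Exact hash match: even a slightly re-encoded copy would slip past it.
    if media is not None and hashlib.sha256(media).hexdigest() in KNOWN_MEDIA_HASHES:
        reasons.append("known_media")
    return reasons


print(scan_post("Watch this #example_banned_tag", b"known-propaganda-video-bytes"))
# ['hashtag', 'known_media']
print(scan_post("Lovely weather today"))
# []
```

The weaknesses are visible in the code itself: substring and hashtag checks miss coded language, and exact hashes miss altered media, which is why human review stays in the loop.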

Best Practices for Identifying and Removing Terrorist Content from Online Platforms

A robust counter-terrorism strategy necessitates a multi-layered approach. Best practices include proactive content scanning using advanced algorithms that identify subtle indicators of extremist ideologies. This goes beyond simple keyword searches to include contextual analysis and image recognition. Human review remains essential, particularly for nuanced cases requiring careful consideration of context and intent. Furthermore, robust reporting mechanisms empower users to flag suspicious content, enabling faster response times. Transparency in platform policies and enforcement is also crucial to build user trust and encourage reporting. Finally, algorithms and policies need regular updates to keep pace with terrorist groups, who constantly adapt their tactics to circumvent detection.

Effectiveness of Different Counter-Terrorism Strategies in Mitigating the Spread of Extremist Ideologies

The effectiveness of counter-terrorism strategies varies. Automated systems are effective at identifying and removing a large volume of easily detectable content, but they struggle with more sophisticated propaganda or content that uses coded language. Human review, although resource-intensive, is vital for such complex cases. Collaboration with law enforcement and counter-terrorism experts provides valuable intelligence and facilitates the takedown of networks involved in spreading extremist ideologies. However, the “cat and mouse” game continues, with terrorist groups constantly adapting their tactics to evade detection. Measuring effectiveness precisely is challenging, but metrics such as the number of accounts suspended, the pieces of content removed, and the disruption of terrorist networks provide some indication of success, as does any measurable reduction in the reach and impact of extremist messaging.

Ethical Considerations Involved in Balancing Free Speech with the Need to Counter Terrorism

Balancing free speech with the imperative to counter terrorism presents a significant ethical challenge. The removal of content, even if deemed terrorist-related, raises concerns about censorship and the potential for abuse. Platforms must establish clear, transparent, and consistently applied policies that respect due process and minimize the risk of suppressing legitimate expression. Independent oversight mechanisms could help ensure accountability and prevent arbitrary content removal. The challenge lies in creating a system that effectively combats terrorism while safeguarding fundamental rights and preventing the chilling effect on free speech. This requires a delicate balance and ongoing dialogue between policymakers, technology companies, and civil society organizations.

The Legal and Ethical Implications of Content Moderation on X

Navigating the digital Wild West is no simple task. As Elon Musk’s X struggles to balance free speech with the urgent need to combat terrorism, it faces a complex legal and ethical minefield. The sheer volume of content, coupled with the platform’s global reach, makes consistent moderation a herculean task, fraught with potential pitfalls. This section explores the legal and ethical tightrope that X and other social media companies must constantly walk.

Legal Challenges Faced by Social Media Companies in Moderating Terrorism-Related Content

Social media platforms face a barrage of legal challenges when attempting to moderate content related to terrorism. Laws vary wildly across jurisdictions, making a consistent global approach nearly impossible. In some countries, broad restrictions on free speech allow for the removal of content that might be considered offensive or harmful, even if it doesn’t directly incite violence. Conversely, other nations prioritize free expression, even if it means tolerating potentially dangerous viewpoints. This creates a legal quagmire for companies like X, forcing them to make difficult decisions about what content to remove and where to draw the line, constantly risking lawsuits from either side. The threat of legal action, coupled with the sheer scale of content to monitor, creates a significant hurdle in effective moderation. Furthermore, the speed at which terrorist groups adapt their tactics and messaging online often outpaces the legal and technical capabilities of platforms to respond effectively.

Ethical Dilemmas in Defining and Addressing Terrorist Content

Determining what constitutes “terrorist content” is inherently subjective and ethically complex. Where does inflammatory rhetoric end and incitement to violence begin? How do you account for satire, parody, or commentary that might be misinterpreted? The line between protected free speech and dangerous propaganda is often blurry, forcing moderators to make difficult judgments with potentially far-reaching consequences. The risk of censorship is ever-present; overly aggressive moderation can stifle legitimate dissent or critical discussion, while overly permissive policies can enable the spread of harmful ideologies. This constant balancing act necessitates a nuanced understanding of context, intent, and the potential impact of each piece of content. The ethical implications are profound, especially when considering the potential for misidentification and the impact on individuals or groups wrongly labeled as promoting terrorism.

Comparison of Legal Frameworks Governing Content Moderation Across Countries

The legal landscape governing online content moderation varies significantly across countries. The European Union, for example, pairs comprehensive data protection law (the GDPR) with rules aimed directly at platforms: the Digital Services Act imposes content moderation and transparency obligations on online platforms, with the strictest duties reserved for very large ones, and the EU regulation on terrorist content online requires hosting services to remove terrorist content within one hour of receiving a removal order from a competent national authority. Other countries, particularly those with less robust legal frameworks, may offer less protection for users and less regulatory oversight for platforms. The result is a complex patchwork of rules that social media companies must navigate, making a uniform approach to content moderation practically impossible. Differing legal standards can lead to conflicts, with content deemed acceptable in one country potentially illegal in another, forcing companies to grapple with jurisdictional complexities and potential legal repercussions.

Hypothetical Scenario: Free Speech vs. Terrorism Content Removal

Imagine a scenario where a user on X posts a video seemingly advocating for a violent overthrow of the government, but couched in allegorical language and presented as a work of political satire. While the video doesn’t explicitly call for violence against specific individuals or groups, its imagery and tone are clearly provocative and could be interpreted as inciting unrest. X faces a dilemma: removing the video would be seen as censorship by some, violating principles of free speech. However, failing to remove it risks enabling the spread of potentially dangerous ideologies and potentially inciting real-world violence. The platform must weigh the potential harm caused by the content against the potential harm caused by suppressing it, considering the legal implications within its various operating jurisdictions, and attempting to establish a transparent and consistent process for decision-making, all while operating under immense public scrutiny.

The Impact of Algorithmic Amplification on the Spread of Extremist Content


Social media algorithms, designed to keep users engaged, can inadvertently become powerful tools for spreading extremist content. The very features intended to personalize the user experience – showing them more of what they already like – can create echo chambers that reinforce radical views and limit exposure to diverse perspectives. This amplification effect is a significant concern, particularly given the potential for rapid dissemination of misinformation and hate speech.

Algorithms on platforms like X (formerly Twitter) operate by prioritizing content deemed likely to generate engagement, often measured by metrics like likes, replies, and retweets. This creates a feedback loop: the more engagement a piece of extremist content receives, the more likely the algorithm is to show it to other users, regardless of its factual accuracy or potential harm. This process, usually an unintended side effect rather than a design goal, can significantly accelerate the spread of harmful ideologies.
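This feedback loop is easy to reproduce in a toy model. The Python sketch below rests on a deliberately simplified assumption and is not a description of X’s ranking system: each round, only the posts with the most accumulated engagement are shown, and each shown post earns a fixed number of new interactions. The post names and numbers are hypothetical.

```python
from typing import Dict


def simulate_feed(start: Dict[str, int], gain: Dict[str, int],
                  rounds: int = 5, slots: int = 2) -> Dict[str, int]:
    """Toy engagement-ranked feed.

    Each round, the `slots` posts with the most accumulated engagement are
    shown, and each shown post earns gain[post] new interactions. Posts that
    are not shown earn nothing, so an early lead compounds.
    """
    totals = dict(start)
    for _ in range(rounds):
        # Rank purely by past engagement: accuracy and harm play no role.
        shown = sorted(totals, key=totals.get, reverse=True)[:slots]
        for post in shown:
            totals[post] += gain[post]
    return totals


result = simulate_feed(
    start={"sensational_claim": 12, "careful_report": 10, "fact_check": 9},
    gain={"sensational_claim": 8, "careful_report": 5, "fact_check": 5},
)
print(result)  # {'sensational_claim': 52, 'careful_report': 35, 'fact_check': 9}
```

The sensational claim starts with a small lead, is shown every round, and more than quadruples its engagement, while the fact-check, only three interactions behind at the start, is never shown at all.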

Algorithmic Biases Favoring Extremist Viewpoints

Several inherent biases within algorithms can unintentionally promote extremist viewpoints. For instance, algorithms may struggle to differentiate between passionate advocacy and hate speech, especially when the latter is cleverly disguised or uses coded language. Furthermore, the sheer volume of data processed can make it difficult to detect subtle patterns of extremist recruitment or propaganda. The emphasis on engagement metrics can also lead to the prioritization of sensational or shocking content, a tactic often employed by extremist groups to gain attention and recruits. A classic example is the rapid spread of conspiracy theories, which often generate high engagement due to their provocative nature, regardless of their lack of factual basis.

Mitigation Strategies Through Algorithmic Changes

To mitigate the spread of extremist content, several algorithmic changes could be implemented. Firstly, a shift away from solely engagement-based metrics is crucial. Algorithms should incorporate factors such as the credibility of the source, the factual accuracy of the information, and the potential for harm. This could involve integrating fact-checking tools and incorporating signals from trusted sources into the ranking system. Secondly, improved detection mechanisms are necessary. This requires investment in advanced AI and machine learning techniques capable of identifying subtle indicators of extremist content, including coded language, symbols, and patterns of behavior within user networks. Finally, algorithms could be designed to actively promote diverse viewpoints and counter-narratives, ensuring users are exposed to a broader range of perspectives beyond their existing echo chambers.

An Alternative Algorithm Prioritizing Factual Information

An alternative algorithm could prioritize factual information by incorporating several key elements. First, it would assign higher ranking to content from verified sources, such as established news organizations and academic institutions. Second, it would utilize fact-checking databases and integrate real-time verification systems to flag potentially false or misleading information. Third, the algorithm would consider the overall context of a post, analyzing its relationship to other content and user interactions to identify patterns of misinformation or hate speech. Finally, it would employ a “decay” function, reducing the prominence of older content, particularly if it has been subsequently debunked or flagged as problematic. This approach aims to reduce the amplification of misinformation while promoting more reliable and trustworthy information. This contrasts sharply with current algorithms that often prioritize novelty and immediate engagement, inadvertently boosting the spread of sensational, yet often false, claims.
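A scoring function that combines these elements might look like the following Python sketch. All weights, half-lives, and field names are hypothetical assumptions chosen for illustration; `source_verified` stands for independent vetting of a source rather than a purchased checkmark, and the contextual-analysis step is left out because it would require a full classifier.

```python
import math
from dataclasses import dataclass


@dataclass
class Item:
    engagement: int         # likes, replies, reposts combined
    source_verified: bool   # independently vetted source (hypothetical signal)
    fact_check_flag: bool   # matched a fact-checking database (hypothetical)
    age_hours: float


# Hypothetical weights and half-lives, chosen only for illustration.
VERIFIED_BOOST = 1.5
FLAG_PENALTY = 0.2
HALF_LIFE_HOURS = 24.0
FLAGGED_HALF_LIFE_HOURS = 6.0  # debunked content fades four times faster


def rank_score(item: Item) -> float:
    # log1p compresses raw engagement so virality alone cannot dominate.
    score = math.log1p(item.engagement)
    if item.source_verified:
        score *= VERIFIED_BOOST
    half_life = HALF_LIFE_HOURS
    if item.fact_check_flag:
        score *= FLAG_PENALTY
        half_life = FLAGGED_HALF_LIFE_HOURS
    # Exponential decay: the score halves every `half_life` hours.
    return score * 0.5 ** (item.age_hours / half_life)


viral_false = Item(engagement=50_000, source_verified=False, fact_check_flag=True, age_hours=12)
sourced = Item(engagement=2_000, source_verified=True, fact_check_flag=False, age_hours=12)
print(rank_score(sourced) > rank_score(viral_false))  # True
```

Compressing engagement with a logarithm and discounting flagged items means a heavily shared but debunked post can rank below a modestly shared, well-sourced one, the reverse of what a pure engagement ranking would produce.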

End of Discussion


The intersection of Elon Musk’s X, its verification system, and the activities of terrorist groups presents a complex and evolving challenge. While the pursuit of free speech is paramount, the potential for misuse and the real-world consequences of unchecked extremist content demand a more nuanced and effective content moderation strategy. The future of X, and indeed the online landscape, hinges on finding a delicate balance – one that safeguards free expression while actively combating the spread of hate and violence. The ongoing debate is far from over, and the stakes are undeniably high.